Project Description

The client deals with a huge volume of data that is updated daily.

The CSV contains 1,50,000 rows of pricing data which have to be uploaded to the server daily. It includes the names of the supplier and the pricing among other columns.

Original Process

- Textual data is converted into numerical data. For example, the names of the suppliers in text format are converted into numerical ids.

- New pricing data is entered every day. During CSV upload it needs to be ensured that duplicate suppliers and pricing data are not added.

- The supplier data which doesn’t change has to be kept intact.

Challenge

- The previous system took 20 to 30 minutes to upload the CSV file to the system

- Converting the textual data into numerical format was an issue.

- Processing the data to see which data needs to be replaced in the old file and which ones need to be deleted after 3 days was complicated and tough.

- The complete client architecture runs on a server with 1GB RAM, so the processing speed had to be fast otherwise it will take up all the server capacity and the rest of the system will fail.

Benefit Of Our Solution

- Reduce the time taken from 20-30 minutes to 30-40 seconds. That’s close to a 33% reduction in time taken.

- We ensured that the platform is available for users while the data is being uploaded.

Optimized Process Established by SynergyTop’s Product Engineering Team

- To begin with, It was not possible to reduce the processing time on a 1GB RAM server so the server was upgraded to a 4GB RAM.

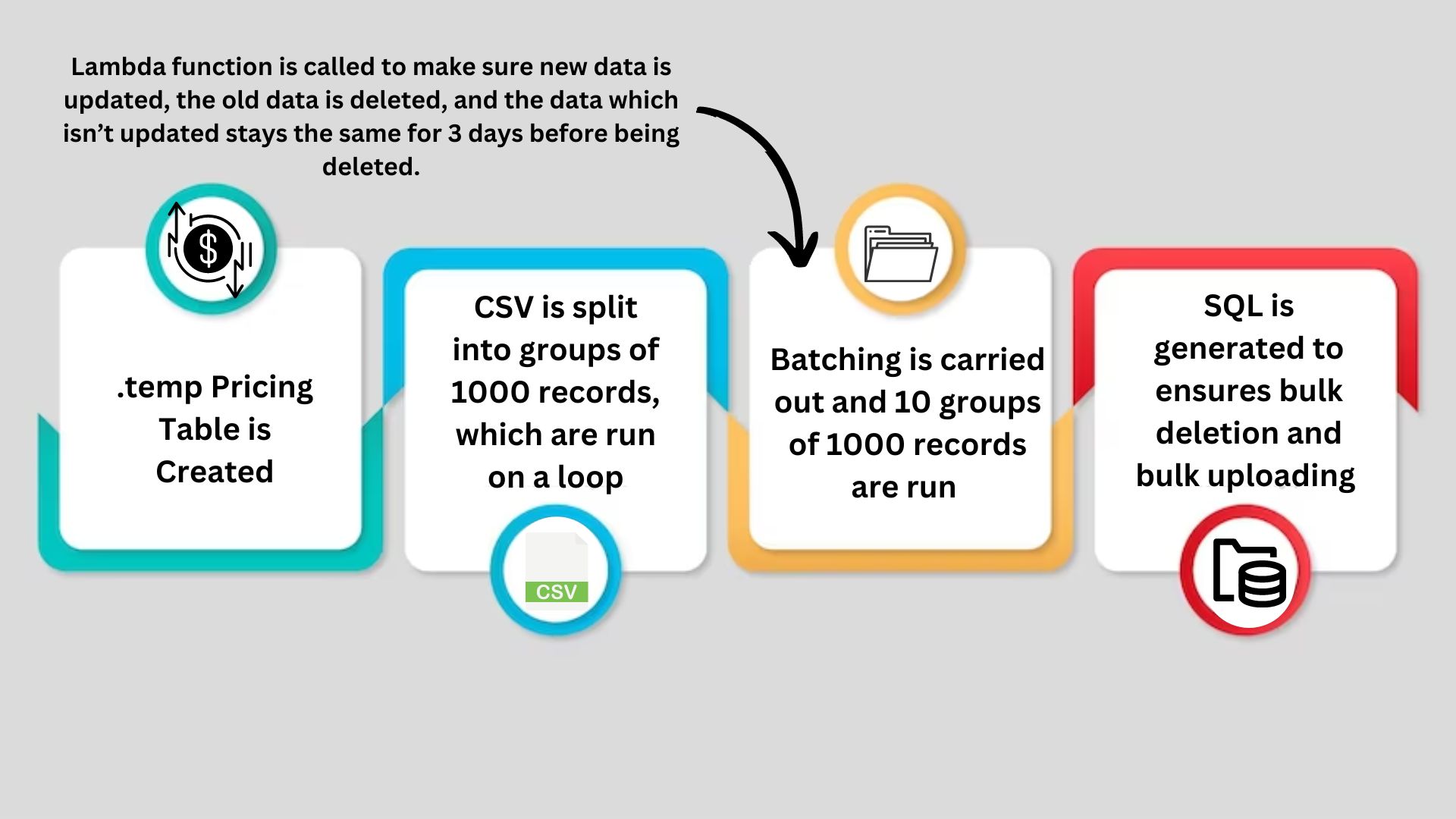

- The data was originally stored in the “Pricing Table” A .temp pricing table was now created to ensure that the unwanted data gets deleted after 3 days.

- The CSV was split into groups of 1000 records which are run on a loop. The data is uniquely mapped and thus ensures no duplication of records.

- After the first loop, batching is carried out and groups of 1000 records are run in batches of 10 files. During this process, the Lambda function is called which ensures new data is updated quickly and as required.

- Based on all this, an SQL is generated which ensures bulk deletion and bulk uploading of data with proper conversion and data processing.

Also, to ensure that data is available to the users at all times, while the data was uploaded in the backend, the old data was still kept available for the users on the front end. This helped make sure that at no time was the platform devoid of data. It was made possible with the use of another database. Data processing happens in a separate database called pricing_upload and once processing is completed the pricing table data is deleted and new data is copied from the pricing_upload database.

The Result

The time taken to upload the CSV was brought down to 30-40 seconds.

Looking for similar solutions? Contact our product engineering team to discuss your project requirements today!